ICIP 2023

30th IEEE International Conference on Image Processing

GRAND CHALLENGE SESSION

Infrared Imaging-based Drone Detection and Tracking in Distorted Surveillance Videos

For queries regarding the challenge, contact us at: icip2023irdrone@gmail.com

INTRODUCTION

The proliferation of Unmanned Aerial Vehicles (UAVs) such as drones has raised serious security and privacy concerns in recent years. Detecting drones is extremely challenging when they closely resemble birds or other flying objects and must be detected under low-visibility conditions: at night, in hilly regions, in populated urban areas, in dense forests and, in most cases, at great distances from the surveillance cameras. Under such low-visibility conditions, infrared imaging outperforms RGB imaging, because infrared images capture differences in temperature and therefore reveal objects that are otherwise invisible to conventional cameras.

In high-level tasks such as object detection and object tracking, the perceptual quality of surveillance videos plays a pivotal role. The major limitation is that the acquired surveillance videos are often severely distorted by external environmental factors, and the quality deteriorates even further when the drones to be identified and tracked are far away, well beyond the effective range of the surveillance camera's field of view (FoV). The proposed dataset consists of infrared images of drones and birds under the challenging scenarios described above. The real challenge lies in identifying and tracking drones under extremely low visibility and various levels of distortion in surveillance videos, while at the same time drawing conclusions on the position of the drones with respect to the FoV of the source surveillance camera.

SIGNIFICANCE OF THE CHALLENGE

One of the most important applications of drone detection and tracking is in defense and military settings, where enemy UAVs must be identified with very low latency and tracked efficiently so that precautionary measures can be taken. Drones used for military purposes are usually camouflaged to prevent the enemy from locating them.

Military operations are usually carried out in hilly regions, forests, and areas with extreme weather conditions. Identifying drones under such conditions is highly challenging, since the surveillance videos are often distorted due to topographical conditions. This challenge therefore aims to address this problem, which would greatly aid the nation's armed forces in maintaining a stringent security system.

The challenge also aims to provide a solution for tracking the trajectory of drones and determining whether they are approaching or receding from a particular FoV, in addition to detecting them in extremely low-visibility conditions. Such information about a drone's trajectory and whereabouts would benefit defense personnel.

RULES FOR PARTICIPATION

- The dataset will be made available to the registered participants.

- The proposed solutions must be able to detect and track drones in real-time infrared videos for each scenario considered in the dataset. A detailed description of the dataset along with a summary of each scenario considered will be provided with the dataset. Annotations for the training set will be made available along with the release of the dataset. The participants are required to submit the code of the proposed algorithm (preferably Python) with necessary comments.

- As part of the submission, teams are required to submit a short document/report summarizing their approach and algorithms. A demo script must also be submitted that can run the solution on a test video for evaluation. The participants must also report the inference time of their code (which is also an evaluation metric) and the specifications of the system on which it was run. The proposed solution must meet the following criteria:

1. The model must be able to identify drones and differentiate them from other flying entities in real-time infrared videos under extremely low visibility conditions and under the different scenarios considered in the dataset (details of the dataset are provided in the section below).

2. The proposed solution should be able to track the trajectory of drones under various topographical conditions, including the distortions considered in the dataset, and must be able to conclude whether the drones are approaching or receding from the FoV of the camera source.

3. The model must be able to detect the type of distortion in the infrared surveillance videos and report it during inference. A brief description of the different distortions will be made available with the dataset.
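The challenge does not prescribe how the approaching/receding decision should be made. One simple cue, assumed here purely for illustration, is that the apparent linear size of a rigid object scales roughly with the inverse of its distance, so a growing bounding box suggests an approaching drone. The minimal sketch below fits a least-squares slope to the square root of the box area across a track. The function names, the `threshold` of 1% relative growth per frame, and the extra `"undetermined"` label for tracks without a clear trend are all hypothetical choices, not part of the challenge specification.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def box_area(box: Box) -> float:
    """Area of an axis-aligned box; degenerate boxes give 0."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def size_trend(boxes: List[Box]) -> float:
    """Least-squares slope of sqrt(area) per frame, normalised by mean size.

    sqrt(area) is roughly proportional to 1 / distance for a rigid object,
    so a positive value suggests the object is getting closer.
    """
    sizes = [box_area(b) ** 0.5 for b in boxes]
    n = len(sizes)
    if n < 2:
        return 0.0
    mean_t = (n - 1) / 2  # mean of frame indices 0..n-1
    mean_s = sum(sizes) / n
    if mean_s == 0:
        return 0.0
    cov = sum((t - mean_t) * (s - mean_s) for t, s in enumerate(sizes))
    var = sum((t - mean_t) ** 2 for t in range(n))
    return (cov / var) / mean_s


def approach_state(boxes: List[Box], threshold: float = 0.01) -> str:
    """Hypothetical rule: label a track from its relative size trend."""
    trend = size_trend(boxes)
    if trend > threshold:
        return "approaching"
    if trend < -threshold:
        return "receding"
    return "undetermined"  # assumed third state: no clear size change


if __name__ == "__main__":
    growing = [(100, 100, 100 + s, 100 + s) for s in range(10, 20)]
    print(approach_state(growing))        # approaching
    print(approach_state(growing[::-1]))  # receding
```

Box sizes from distorted or low-contrast frames can jitter, so such a trend is likely more reliable when computed over a sliding window of recent frames than from two consecutive frames.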

EVALUATION CRITERIA

The algorithms proposed by the participants will be tested on various test cases of surveillance videos, using the following evaluation metrics:

- Inference time: the faster the inference, the higher the score, based on the frames per second (fps) reported by the teams.

- Classification metrics: Accuracy, F1 score, Precision, Recall, Average IoU Score, Average Class Loss and Mean Average Precision (mAP) score. (Note: the mAP score will be given higher preference.)

- Accuracy in detecting the distortion type, tracking drones, and concluding the position of the drone relative to the FoV of the source camera (approaching or receding).
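The organizers do not state their exact mAP protocol (for example, which IoU threshold or thresholds are used). As a rough sketch, assuming a single IoU threshold of 0.5 and Pascal VOC-style all-point interpolation, per-class average precision together with precision, recall and F1 could be computed as below. The names `evaluate_class` and `f1_score` and the tuple layouts are illustrative assumptions.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Detection = Tuple[str, float, Box]       # (image_id, confidence, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def evaluate_class(
    detections: Sequence[Detection],
    ground_truth: Dict[str, List[Box]],
    iou_threshold: float = 0.5,  # assumed threshold, not confirmed by the organizers
) -> Tuple[float, float, float]:
    """Return (AP, precision, recall) for ONE class, e.g. all drone boxes."""
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    # Greedy matching in descending confidence order; each ground-truth
    # box may be claimed by at most one detection.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best:
                best, best_j = overlap, j
        if best_j >= 0 and best >= iou_threshold and not used[img][best_j]:
            used[img][best_j] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt if n_gt else 0.0)
    # All-point interpolation: precision at each rank becomes the maximum
    # precision at any later rank, then it is integrated over recall steps.
    interp = precisions[:]
    for i in range(len(interp) - 2, -1, -1):
        interp[i] = max(interp[i], interp[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(interp, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    precision = precisions[-1] if precisions else 0.0
    recall = recalls[-1] if recalls else 0.0
    return ap, precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


if __name__ == "__main__":
    gt = {"f1": [(0, 0, 10, 10)], "f2": [(20, 20, 30, 30)]}
    dets = [("f1", 0.9, (0, 0, 10, 10)), ("f2", 0.8, (50, 50, 60, 60)),
            ("f2", 0.7, (20, 20, 30, 30))]
    ap, p, r = evaluate_class(dets, gt)
    print(round(ap, 4), round(p, 4), r, round(f1_score(p, r), 4))  # 0.8333 0.6667 1.0 0.8
```

Under these assumptions, mAP would be the mean of the per-class AP values (for example, drone and bird). COCO-style evaluation instead averages AP over IoU thresholds from 0.5 to 0.95, so scores computed this way may not match the official benchmark.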

Important note: Algorithms that exhibit higher accuracy when the drones are extremely small, far off and affected by various distortions in the surveillance videos will be given higher weightage. Benchmark values will be released along with the test data, and teams outperforming this benchmark will be considered for the awards.

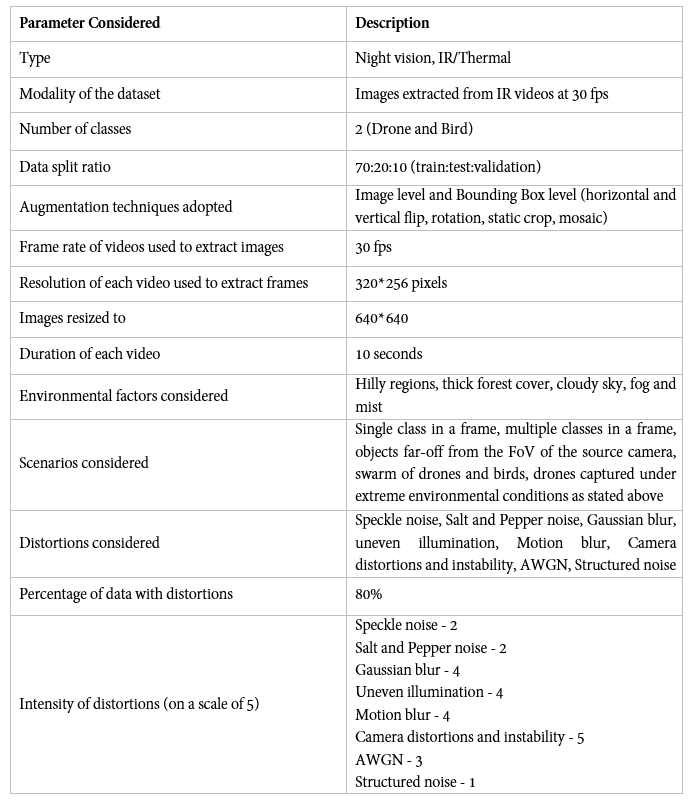

DATASET DETAILS

The training data will be released to the registered participants on February 10, 2023.

Each registered team will receive a link to a zipped folder that will contain the following:

1. A folder containing images of drones and birds along with text annotations in YOLO format.

2. A file containing the type of distortion corresponding to each image in the image folder.

3. A folder containing RGB images of drones and birds. Using this data is optional: participants can design algorithms for RGB-to-infrared conversion or use multi-modal fusion methods to train their models. (Note: teams that do not use this data will not be penalized in scoring.)

4. A document containing a brief description of the distortions and scenarios considered.
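The dataset description states only that the annotations are in YOLO format. In that format, each line of an image's text file describes one object as `class x_center y_center width height`, with the four coordinates normalized to [0, 1] by the image width and height. A minimal parser that converts these lines to pixel-space corner boxes might look like this; the class-id mapping `{0: "drone", 1: "bird"}` and the names `Annotation` and `parse_yolo_labels` are hypothetical and should be checked against the dataset documentation.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical class-id mapping; replace with the one given in the dataset docs.
CLASS_NAMES: Dict[int, str] = {0: "drone", 1: "bird"}


@dataclass
class Annotation:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_yolo_labels(text: str, img_w: int, img_h: int) -> List[Annotation]:
    """Convert YOLO-format label lines into pixel-space corner boxes.

    Each non-empty line is 'class x_center y_center width height', with the
    coordinates normalised to [0, 1] by the image width and height.
    """
    boxes = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        if len(parts) != 5:
            raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
        cls = int(parts[0])
        xc, yc, w, h = (float(v) for v in parts[1:])
        boxes.append(Annotation(
            label=CLASS_NAMES.get(cls, str(cls)),  # unknown ids kept as text
            x_min=(xc - w / 2) * img_w,
            y_min=(yc - h / 2) * img_h,
            x_max=(xc + w / 2) * img_w,
            y_max=(yc + h / 2) * img_h,
        ))
    return boxes


if __name__ == "__main__":
    sample = "0 0.5 0.5 0.25 0.5\n1 0.25 0.25 0.5 0.5\n"
    for ann in parse_yolo_labels(sample, img_w=640, img_h=512):
        print(ann)
```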

SUBMISSION DETAILS

Each participating team must submit their solutions in the following format.

Each team is required to email their solution in a zipped file to icip2023irdrone@gmail.com with the following specifications:

- File naming format: TeamName_IRDrone_ICIP2023

- Email Subject: ICIP 2023 Challenge Submission

Each zipped file will contain the following items:

- A Jupyter/Colab notebook containing the complete implementation with appropriate comments.

- The notebook must contain the complete specification of the algorithm used for detecting drones and birds in images/videos, tracking drones, detecting the type of distortion, and concluding whether a drone at any instant is approaching or receding from the FoV of the surveillance camera.

- A demo code must also be submitted that can be executed on a test video to evaluate the submitted solution. The demo must display the detection confidence score, the type of distortion and the position of the detected drone (approaching/receding) along with the bounding box output.

- The trajectory of the detected drone/bird must also be reported as a part of the demo code. The algorithm for tracking can either be submitted separately or a single algorithm can be submitted for detection + tracking.

- The zipped file must also contain a short description/report summarizing the entire approach and implementation details.
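The trajectory-reporting requirement can be met with any multi-object tracker. As a simple illustrative baseline (not the method of any participating team), the sketch below associates same-class detections across frames by greedy IoU matching, keeps each track's box history, and reports the trajectory as the sequence of box centers. It also formats the per-box text the demo is required to display. `IoUTracker`, `overlay_text`, the `iou_threshold`/`max_missed` defaults and the distortion label `"blur"` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    label: str                      # e.g. "drone" or "bird"
    boxes: List[Box] = field(default_factory=list)
    missed: int = 0                 # consecutive frames without a match

    @property
    def trajectory(self) -> List[Tuple[float, float]]:
        """Box centres over time: the trajectory to report."""
        return [((x1 + x2) / 2, (y1 + y2) / 2) for x1, y1, x2, y2 in self.boxes]


class IoUTracker:
    """Hypothetical greedy IoU tracker, intended only as a baseline."""

    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed
        self.tracks: Dict[int, Track] = {}
        self._next_id = 0

    def update(self, detections: List[Tuple[str, Box]]) -> List[Track]:
        """Consume one frame of (label, box) detections; return live tracks."""
        unmatched = list(range(len(detections)))
        # Score every same-label (track, detection) pair; best overlaps first.
        pairs = sorted(
            ((iou(t.boxes[-1], detections[d][1]), tid, d)
             for tid, t in self.tracks.items()
             for d in unmatched
             if t.label == detections[d][0]),
            reverse=True,
        )
        matched = set()
        for score, tid, d in pairs:
            if score < self.iou_threshold:
                break  # all remaining pairs overlap even less
            if tid in matched or d not in unmatched:
                continue
            self.tracks[tid].boxes.append(detections[d][1])
            self.tracks[tid].missed = 0
            matched.add(tid)
            unmatched.remove(d)
        # Age unmatched tracks and drop those missing for too long.
        for tid in list(self.tracks):
            if tid not in matched:
                self.tracks[tid].missed += 1
                if self.tracks[tid].missed > self.max_missed:
                    del self.tracks[tid]
        # Every leftover detection starts a new track.
        for d in unmatched:
            label, box = detections[d]
            self.tracks[self._next_id] = Track(self._next_id, label, [box])
            self._next_id += 1
        return list(self.tracks.values())


def overlay_text(label: str, confidence: float, distortion: str, state: str) -> str:
    """Text to draw next to a bounding box in the demo output."""
    return f"{label} {confidence:.2f} | {distortion} | {state}"


if __name__ == "__main__":
    tracker = IoUTracker()
    tracker.update([("drone", (0, 0, 10, 10))])
    tracker.update([("drone", (1, 0, 11, 10))])
    print(tracker.tracks[0].trajectory)  # [(5.0, 5.0), (6.0, 5.0)]
    print(overlay_text("drone", 0.876, "blur", "approaching"))
```

Drawing each box and its `overlay_text` string on the frame could then be done with OpenCV's `cv2.rectangle` and `cv2.putText`. Greedy IoU association tends to break down for very small, fast-moving targets, where motion models (for example, Kalman-filter prediction) are commonly added.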

ORGANIZING TEAM

Dr. S Sethu Selvi

Professor

Department of ECE, Ramaiah Institute of Technology,

India

Dr. Raghuram S

Associate Professor

Department of ECE, Ramaiah Institute of Technology,

India

Dr. Sitaram Ramachandrula

Senior Director, Data Science,

[24]7.ai

India

Dharini Raghavan

Student

Department of ECE, Ramaiah Institute of Technology,

India

Vishnu R Rao

Student

Department of ECE, Ramaiah Institute of Technology,

India

Srinidhi A V

Student

Department of ECE, Ramaiah Institute of Technology,

India

IMPORTANT DATES

Registration opening and launch of challenge website – January 25, 2023

Release of Training Data – February 10, 2023

Availability of Test Data – February 20, 2023

Submission Open – February 25, 2023

Registration closes – February 25, 2023

Submission Ends – March 15, 2023

Announcement of top three teams – March 24, 2023

Challenge Paper Submission (Camera Ready) – April 26, 2023

Announcement of Winners – ICIP 2023

AWARDS

- The top three teams will be invited to present their solutions in a dedicated session at ICIP 2023 and will also have the opportunity to contribute to a paper summarizing the challenge outcomes, which will be submitted to the ICIP 2023 proceedings.

- The organizers will contact potential sponsors for supporting 1-3 awards for the best performing teams.


CHALLENGE OUTCOMES

The challenge drew overwhelming participation, with over 30 teams from 12 countries across the globe! The following teams demonstrated remarkable outcomes and presented innovative solutions.

First Place - Team IRD_YOLOv8_MOT (WINNERS)

Mr. Vinayak Nageli, CAIR, DRDO, Bangalore

Dr. Arshad Jamal, CAIR, DRDO, Bangalore

Mr. Arun Kumar, IIT, Tirupati

Prof. Rama Krishna Sai S Gorthi, IIT, Tirupati

Second Place - Team SPIN (RUNNERS UP)

Yann GAVET, Ecole des mines Saint-Etienne

Abdelouahid BENTAMOU, Ecole des mines Saint-Etienne

Ossama MESSAI, Ecole des mines Saint-Etienne

Abbass ZEIN EDDINE, Ecole des mines Saint-Etienne

HEARTY CONGRATULATIONS TO THE WINNERS!!

SCORE TABLE

| METRIC | TEAM IRD_YOLOv8_MOT | TEAM SPIN |
|---|---|---|
| mAP | 97% | 92.6% |
| Precision / Recall | 98% / 89% | 94.2% / 94.2% |
| Distortion Classification Accuracy | 93% | 92% |